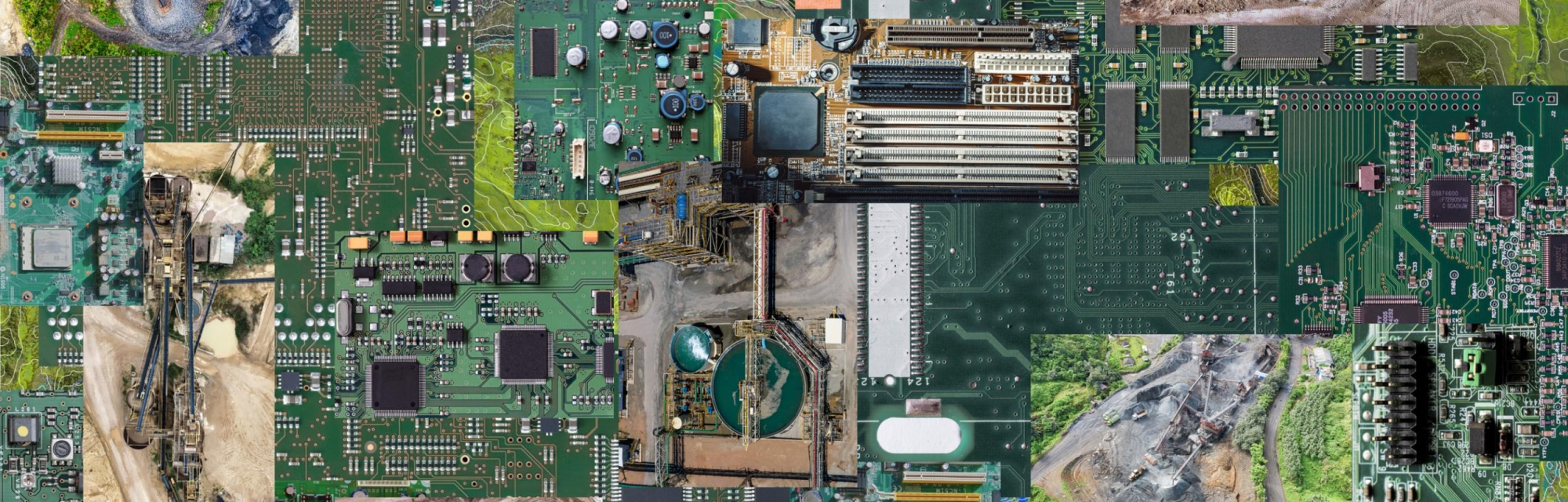

Image credits: Sinem Görücü / Better Images of AI / Deed - Attribution 4.0 International - Creative Commons

In a recent interview, Box CEO Aaron Levie described a "coding boom" driven by AI, one where the barrier between a great idea and a functional product is thinner than ever.

At Marino, we’re seeing this shift firsthand. Software is moving from being a static container for data to an active, "agentic" participant in workflows. However, as this wave of automation hits the enterprise, it brings a critical question to the surface: How do we use this new speed without losing the reliability that regulated industries demand?

The excitement around AI agents is real, but for those of us building in healthcare, finance, and the public sector, the "AI coding boom" must be tempered by a commitment to deterministic reality.

There is a fundamental technical and legal divide between software that behaves deterministically and software that behaves probabilistically.

In a public sector welfare assessment or a clinical diagnostic tool, a "hallucination" isn't just a bug: it’s a potential violation of civil rights or a threat to patient safety. You cannot cross-examine a probability. In regulated industries, the law requires explainability. When we prioritise user safety, we have to recognise that an unpredictable result in a high-stakes environment is often legally defined as negligence.

A dangerous myth in this AI gold rush is that offloading code generation to AI also offloads the risk. At Marino, we align our strategy with frameworks like the EU AI Act, which makes one point very clear: the developer is legally responsible for the product.

Whether a human wrote every line or an AI "co-piloted" the process, you are legally on the hook for the outcome. In the eyes of regulators, "the AI suggested it" is not a valid defence for professional negligence. This is especially vital in the public sector, where software acts as an instrument of policy. As we discussed in our look at Ireland’s new Disability Strategy, digital experiences must be inclusive, authorised, and transparent. If an automated system makes a high-stakes decision, it must be auditable, traceable, and subject to meaningful human oversight.

We believe the AI coding boom is a massive unlock for creativity. It allows us to move away from the repetitive "plumbing" of development and to focus on high-level architecture and user experience. But we maintain a clear line between AI-assisted productivity and AI-led decision making.

We use GenAI to:

But when it comes to the core logic (the parts of the system that handle your data, your health, or your legal rights), we rely on the unwavering reliability of expert human engineering to ensure the system behaves exactly as intended.

The software of the next decade won’t just be "digitised". It will be intelligent. But for that intelligence to be useful in the real world, it must be anchored in accountability.

At Marino, we aren't just building faster; we are building more responsibly.

Are you navigating the balance between AI innovation and regulatory compliance? Get in touch and let’s discuss how to build a system that is both cutting-edge and legally resilient.

We’re ready to start the conversation however best suits you - on the phone at +353 (0)1 833 7392 or by email